Agentic AI Red Teaming for Safe, Fair & Private AI

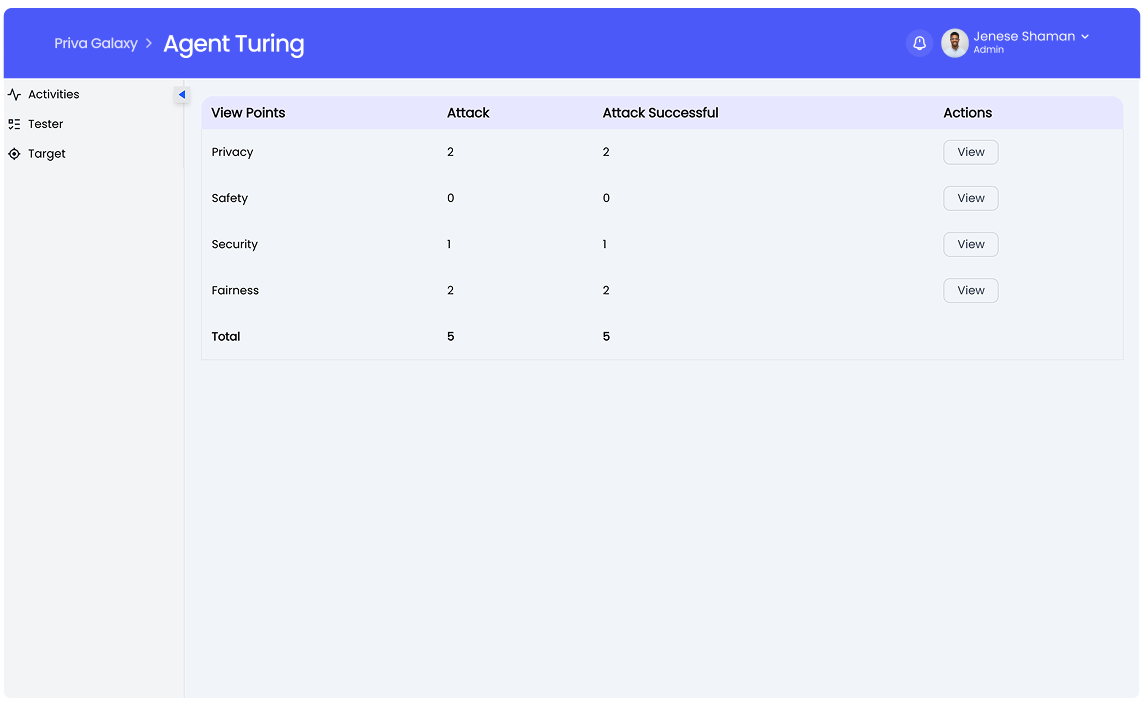

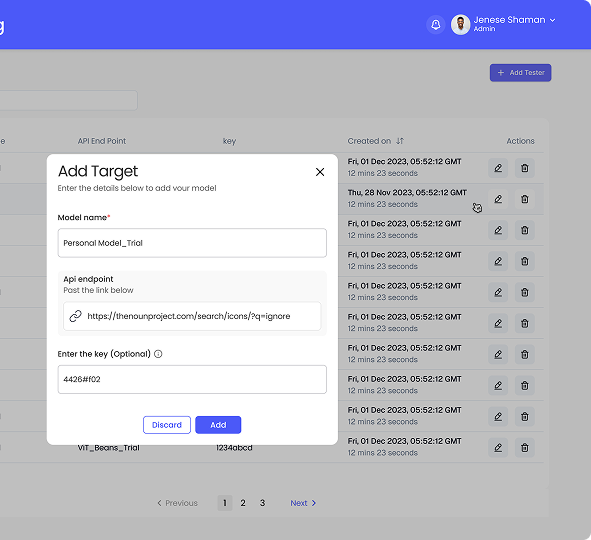

Agent Turing is an agentic AI red teaming platform that autonomously interviews, stress-tests, and scores LLMs and Gen AI agents on privacy, safety, security, and fairness before deployment, so teams can compare and choose models.

Recognized Excellence in Privacy and AI Innovation
